Sunday, October 6, 2024

Revolutionizing Machine Learning with a Decentralized Federated Learning Platform

In today’s digital landscape, the need for privacy-preserving, scalable, and decentralized machine learning solutions is more critical than ever. With vast amounts of data distributed across different sources, centralized AI models face challenges related to privacy, data security, and the cost of data transfer. The advent of decentralized federated learning offers a cutting-edge solution to these problems.

At Kalman Labs, we are building a Decentralized Federated Learning Platform that leverages the power of Web3.0, IPFS (InterPlanetary File System), and blockchain smart contracts to create a secure, scalable, and collaborative machine learning ecosystem. This platform addresses some of the most pressing challenges in AI by combining blockchain’s security with federated learning’s collaborative approach, all while preserving user privacy.

Why Decentralized Federated Learning?

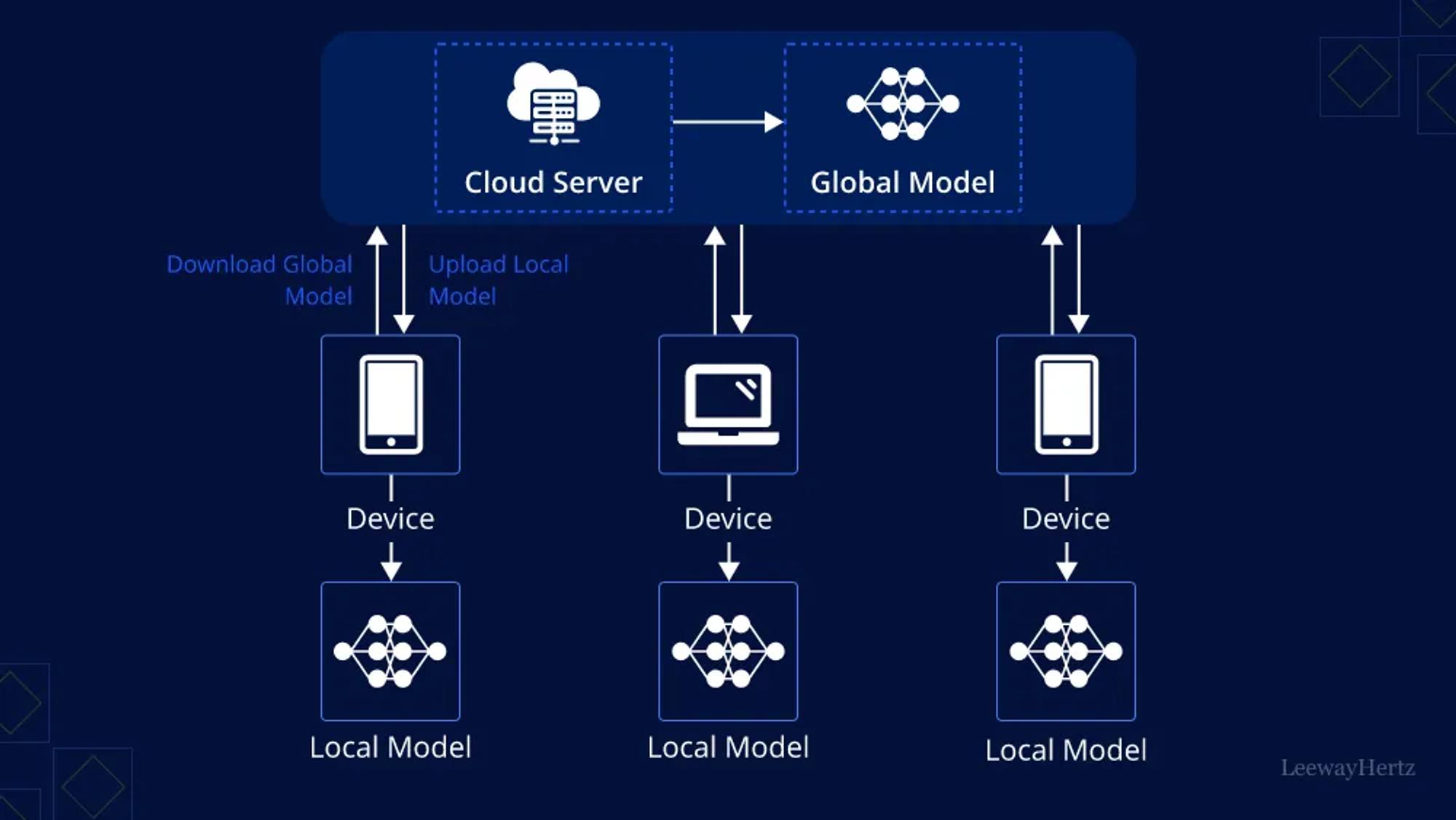

Federated learning has become a hot topic in machine learning because it can train models across multiple decentralized devices without sharing raw data. Each node, or device, in the system computes updates based on its local data and shares the updates (not the data) with a central aggregator to improve the global model. However, traditional federated learning still depends on that central aggregator, which remains a single point of failure and control for both aggregation and governance.

The solution lies in decentralization, which removes the dependency on a central authority and enhances security, scalability, and collaboration. Our platform aims to bring Web3.0 technologies into federated learning, creating a more secure, transparent, and autonomous system where participants can collaborate without ever sharing raw data.

Key Features of Our Decentralized Platform

1. Blockchain Smart Contracts for Trustless Coordination

In our platform, smart contracts serve as the backbone for decentralized coordination. They handle user registration, store references to data held on IPFS, and initiate federated learning rounds. The smart contract also aggregates model updates using the Federated Averaging (FedAvg) algorithm, so every aggregation step is recorded on-chain, where it is transparent and tamper-resistant. Additionally, smart contracts provide an optional incentive mechanism that rewards participants based on their contributions and reputation.

2. IPFS for Decentralized Data Storage

Data privacy is a top priority. By leveraging IPFS, the platform decentralizes data storage, meaning that user data is never stored in a centralized location. Instead, data is uploaded to IPFS, and only content identifiers (CIDs) are shared. These CIDs act as references that worker nodes use to retrieve the relevant training data, preserving user privacy while enabling effective machine learning.
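
To make the role of a CID concrete, here is a minimal sketch of content addressing. It rests on some loud assumptions: `ToyContentStore` is a hypothetical in-memory class, not the IPFS API, and a plain SHA-256 hex digest stands in for a real CID (actual IPFS CIDs wrap the hash in multihash and multibase encodings).

```python
import hashlib


class ToyContentStore:
    """Hypothetical in-memory stand-in for a content-addressed store such as IPFS."""

    def __init__(self):
        self._blocks = {}

    def add(self, data: bytes) -> str:
        # The identifier is derived from the content itself, so identical
        # bytes always map to the same identifier.
        cid = hashlib.sha256(data).hexdigest()
        self._blocks[cid] = data
        return cid

    def get(self, cid: str) -> bytes:
        data = self._blocks[cid]
        # Re-hash on retrieval to check that the content matches its identifier.
        if hashlib.sha256(data).hexdigest() != cid:
            raise ValueError("content does not match its identifier")
        return data


store = ToyContentStore()
payload = b"feature_1,feature_2,label\n0.1,0.2,1\n"
cid = store.add(payload)      # only this short reference would be shared
retrieved = store.get(cid)    # a worker resolves the reference to the bytes
```

Because the identifier is a hash of the bytes, a worker can check that what it fetched is exactly what was registered. On a public content-addressed network, anyone holding the identifier can usually fetch the content, so sensitive data would typically be encrypted before upload.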

3. Decentralized Model Aggregation

Worker nodes on the platform perform local data processing, train machine learning models locally, and share the model updates (not the data) with the smart contract. This removes the need for a centralized aggregator, ensuring the process is distributed, secure, and fault-tolerant. Using FedAvg, the smart contract aggregates updates from multiple nodes to improve the global model.
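
The arithmetic behind FedAvg is a sample-weighted average of the weights each node reports. The sketch below shows that arithmetic in plain Python for illustration only; `fed_avg` and its parameters are hypothetical names, and an on-chain version would likely need fixed-point integers rather than floats.

```python
from typing import List, Sequence


def fed_avg(updates: Sequence[Sequence[float]], num_samples: Sequence[int]) -> List[float]:
    """Average weight vectors, weighting each node by its local sample count."""
    if not updates or len(updates) != len(num_samples):
        raise ValueError("need one sample count per update")
    total = sum(num_samples)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(updates[0])
    averaged = [0.0] * dim
    for weights, n in zip(updates, num_samples):
        if len(weights) != dim:
            raise ValueError("all updates must have the same length")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Two nodes: the second holds three times as much data, so it counts three times as much.
global_weights = fed_avg(
    updates=[[1.0, 2.0], [3.0, 4.0]],
    num_samples=[1, 3],
)
```

Weighting by sample count means a node with more data pulls the global model further toward its own result than a node with very little data.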

4. Privacy-Preserving Local Training

Each worker node handles data preprocessing, local training, and the computation of model updates independently. This ensures that raw data remains with the user, providing a strong layer of data privacy while allowing participation in collaborative learning.
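
As a rough illustration of what a worker node might run, the sketch below fits a one-feature linear model with gradient descent, starting from the current global weights. The model, the `local_train` function, and its hyperparameters are assumptions chosen for brevity, not the platform's actual training code.

```python
from typing import List, Tuple


def local_train(
    global_weights: List[float],
    data: List[Tuple[float, float]],
    lr: float = 0.1,
    epochs: int = 50,
) -> Tuple[List[float], int]:
    """Fit y = w * x + b on local (x, y) pairs, starting from the global weights."""
    w, b = global_weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    # Only the weights and the sample count are returned, never `data`.
    return [w, b], n


local_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]  # generated from y = 2x + 1
new_weights, sample_count = local_train([0.0, 0.0], local_data, lr=0.1, epochs=500)
```

The return value is the only thing that would be submitted for aggregation: a short weight vector plus the number of samples used to produce it.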

Step-by-Step Implementation Flow

- User Registration: Users connect to the platform using Web3 wallets and upload their data to IPFS. The platform stores the associated CIDs via the smart contract for future access.
- Training Initialization: A user or automated process initiates a new training round by calling a smart contract function. The smart contract then broadcasts this message to all registered worker nodes, prompting them to start local model training.
- Local Training: Worker nodes retrieve the global model from the smart contract, access the required data from IPFS, and perform local model training. The resulting model updates are then shared with the smart contract.
- Model Weight Aggregation: Using the FedAvg algorithm, the smart contract aggregates the model updates from all worker nodes to compute a global model update, which is stored on the blockchain.
- Global Model Broadcast: Once the model is updated, the smart contract broadcasts the updated global model back to all worker nodes, enabling them to apply the changes to their local models and continue training.
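
The flow above can be sketched end to end with a small in-memory simulation. `ToyCoordinator` is a hypothetical stand-in for the smart contract, written in Python purely to show the order of calls; the method names, worker names, and CID strings are all illustrative assumptions, not the platform's contract interface.

```python
from typing import Dict, List, Tuple


class ToyCoordinator:
    """Hypothetical in-memory stand-in for the coordinating smart contract."""

    def __init__(self, initial_weights: List[float]):
        self.global_weights = list(initial_weights)
        self.data_cids: Dict[str, str] = {}  # worker address -> data CID
        self.round_id = 0
        self.pending: Dict[str, Tuple[List[float], int]] = {}

    def register(self, worker: str, cid: str) -> None:
        # User registration: store the reference to the worker's data.
        self.data_cids[worker] = cid

    def start_round(self) -> int:
        # Training initialization: open a new round and clear old submissions.
        self.round_id += 1
        self.pending.clear()
        return self.round_id

    def submit_update(self, worker: str, weights: List[float], num_samples: int) -> None:
        # Local training result: accept one update per registered worker.
        if worker not in self.data_cids:
            raise PermissionError("worker is not registered")
        self.pending[worker] = (list(weights), num_samples)

    def aggregate(self) -> List[float]:
        # Model weight aggregation: sample-weighted average of submissions.
        if not self.pending:
            raise RuntimeError("no updates submitted for this round")
        total = sum(n for _, n in self.pending.values())
        dim = len(self.global_weights)
        merged = [0.0] * dim
        for weights, n in self.pending.values():
            for i in range(dim):
                merged[i] += weights[i] * n / total
        # Global model broadcast: workers read this value in the next round.
        self.global_weights = merged
        return merged


contract = ToyCoordinator(initial_weights=[0.0, 0.0])
contract.register("worker-a", "cid-a")
contract.register("worker-b", "cid-b")
round_id = contract.start_round()
contract.submit_update("worker-a", [1.0, 1.0], num_samples=10)
contract.submit_update("worker-b", [3.0, 5.0], num_samples=30)
new_global = contract.aggregate()
```

A real deployment would replace the direct method calls with signed transactions and emitted events, but the sequence of register, start, submit, and aggregate stays the same.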

Incentivizing Participation

To encourage participation and ensure the quality of contributions, the platform incorporates an incentive mechanism. Participants can be rewarded based on their contributions to the training process, reputation scores, or the quality of their model updates. This makes the system not only collaborative but also rewarding for those who contribute effectively.
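
One simple way such a mechanism could work is to split a fixed reward pool in proportion to each participant's contribution, scaled by a reputation score. The rule below is a hypothetical example for illustration; `split_rewards`, the scoring formula, and the sample values are assumptions, not the platform's actual reward scheme.

```python
from typing import Dict


def split_rewards(
    pool: float,
    contributions: Dict[str, float],
    reputation: Dict[str, float],
) -> Dict[str, float]:
    """Split `pool` in proportion to contribution * reputation (a hypothetical rule)."""
    scores = {
        # Workers without a recorded reputation default to a neutral 1.0.
        worker: contributions[worker] * reputation.get(worker, 1.0)
        for worker in contributions
    }
    total = sum(scores.values())
    if total <= 0:
        return {worker: 0.0 for worker in contributions}
    return {worker: pool * score / total for worker, score in scores.items()}


payouts = split_rewards(
    pool=100.0,
    contributions={"worker-a": 100, "worker-b": 300},  # e.g. samples trained on
    reputation={"worker-a": 1.0, "worker-b": 0.5},
)
```

Here worker-b contributes three times as many samples but has half the reputation, so its score is 150 against worker-a's 100 and the pool is split 60 to 40.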

Security, Scalability, and Privacy Considerations

Security is at the forefront of our decentralized platform. Communication between worker nodes and the smart contract is secured using encryption protocols, protecting data and model updates against tampering in transit. The blockchain records all transactions, updates, and interactions immutably and transparently.

The platform is designed to scale, supporting a large number of worker nodes. By distributing data storage across IPFS and model training across decentralized nodes, we avoid the bottlenecks and single points of failure associated with traditional centralized systems.

Privacy is a key feature. Local training keeps raw data out of the aggregation process, while differential privacy techniques and encryption limit what the shared model updates can reveal, preserving user privacy while enabling collaborative learning.
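
The text does not say which differential privacy mechanism is used. A common pattern in federated learning is to clip each update to a maximum L2 norm and then add Gaussian noise before sharing it, and the sketch below shows that pattern under stated assumptions: `privatize_update`, `clip_norm`, and `noise_std` are hypothetical names, and calibrating the noise to a formal privacy budget is out of scope here.

```python
import math
import random
from typing import List, Optional


def privatize_update(
    update: List[float],
    clip_norm: float,
    noise_std: float,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Clip an update to `clip_norm` (L2) and add Gaussian noise to each coordinate."""
    rng = rng or random.Random()
    norm = math.sqrt(sum(v * v for v in update))
    # Scale down only if the update is longer than the allowed norm.
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    clipped = [v * scale for v in update]
    return [v + rng.gauss(0.0, noise_std) for v in clipped]


# With zero noise, only the clipping step is visible: [3, 4] has norm 5.
clipped_only = privatize_update([3.0, 4.0], clip_norm=1.0, noise_std=0.0)
# With noise, a seeded generator keeps the example reproducible.
noisy = privatize_update([3.0, 4.0], clip_norm=1.0, noise_std=0.1, rng=random.Random(0))
```

Clipping bounds how much any single node can move the global model, and the added noise masks the contribution of individual records at some cost in accuracy.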

A New Paradigm for Machine Learning

By decentralizing federated learning, we are creating a system where privacy, security, and scalability come together. Whether it’s healthcare, finance, or IoT, industries that rely on privacy-preserving machine learning models stand to benefit from our platform. This system opens up new avenues for collaborative, secure, and decentralized AI development without compromising data privacy.

At Kalman Labs, we believe that the future of AI lies in decentralization, and we are proud to be at the forefront of this movement. Our Decentralized Federated Learning Platform is set to revolutionize how we train machine learning models, making AI not only more secure but also more inclusive.

Join us on this journey to build a more decentralized, privacy-first future for AI!