Tuesday, September 24, 2024

Building Personalized AI Language Models Using Brain Data

Introduction to Personalized AI

Artificial Intelligence (AI) has rapidly evolved in recent years, particularly in natural language processing (NLP). AI systems like GPT-3 can now generate text that is coherent, contextually appropriate, and often hard to distinguish from human writing. However, these systems are generalized. They are trained on large datasets but do not consider individual differences in how users think, process, or interpret information.

Personalized AI aims to change this by adapting to the unique cognitive profiles of users. By integrating brain data into AI models, we can create systems that tailor their responses and behaviors to each individual. Imagine an AI that knows how your brain works and adjusts its interactions to better suit your personal preferences and cognitive abilities.

This blog will explore how brain data from neuroimaging can be used to create personalized AI models, focusing on the process of combining neuroscience with AI and the challenges we face along the way.

The Concept of Personalization in AI

Before diving into the specifics of integrating brain data, it’s important to understand what “personalization” in AI means. Traditional AI systems are designed to handle tasks in a uniform manner, applying the same rules and models to all users. While this works well for general tasks, it doesn’t account for the individual differences that exist in human cognition.

For instance, everyone processes language differently. Some people might be visual thinkers, associating words with images, while others might rely on logical reasoning or emotional cues to understand a sentence. A personalized AI model would take these differences into account, making the interaction more natural and effective for each user.

Personalization can happen in different forms:

- Language Adaptation: Tailoring the way the AI speaks or responds based on the user’s preferred style of communication.

- Cognitive Style Recognition: Understanding whether a user is more detail-oriented or prefers broader summaries and adjusting responses accordingly.

- Behavioral Customization: Adapting interactions based on past behavior, learning from how the user engages with the AI over time.

But how do we achieve this level of personalization? The key lies in understanding the user’s brain and incorporating that information into the AI.

Integrating Brain Data into AI Models

To create personalized AI models, the first step is to gather detailed information about the user’s brain. This is where neuroimaging comes into play. As we discussed in Blog 1, neuroimaging techniques like MRI and fMRI provide us with detailed maps of the brain’s structure and function. The data from these images can then be used to shape how the AI model behaves.

Let’s walk through the process of integrating brain data into AI models.

1. Collecting Brain Data through Neuroimaging

The first step is to collect brain data using neuroimaging techniques like MRI or fMRI. Structural MRI provides anatomical data, such as cortical thickness, surface area, and the size of different brain regions. Functional MRI (fMRI) gives insight into how different parts of the brain activate during tasks like reading or problem-solving.

- Cortical Thickness: As discussed earlier, the thickness of the cortex (the outer layer of the brain) varies from person to person and is related to cognitive abilities. People with thicker cortices in certain areas might have stronger abilities in tasks like memory recall or language processing.

- Functional Activation Patterns: fMRI data can reveal how different parts of the brain are engaged during specific activities, such as reading, speaking, or making decisions.

This data provides the foundation for personalizing an AI model because it offers a glimpse into how an individual processes information.

2. Preprocessing Brain Data

Once the neuroimaging data is collected, it needs to be preprocessed. Preprocessing is a series of steps that clean and prepare the data for analysis. These steps involve removing unnecessary information (like the skull), correcting for any distortions in the images, and extracting relevant features like cortical thickness or brain region volumes.

Key Preprocessing Steps:

- Skull Stripping: Removing non-brain tissue from the images so that only the brain structure is analyzed.

- Normalization: Aligning each image to a common template and standardizing intensities so that comparisons between different brains can be made accurately.

- Segmentation: Dividing the brain into different regions to allow detailed analysis of specific areas like the hippocampus (important for memory) or the prefrontal cortex (involved in decision-making).

By the end of this preprocessing phase, we have a clear and clean dataset that represents the individual’s brain in a format that can be fed into an AI model.
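The three steps above can be sketched on a toy, flattened image. This is only an illustration under stated assumptions: real pipelines rely on dedicated neuroimaging tools, the brain mask and label map are assumed to be computed already, and the function names and voxel values are hypothetical. For normalization, the sketch uses simple z-score intensity scaling rather than alignment to a template.

```python
from collections import Counter
from statistics import mean, pstdev


def strip_skull(voxels, brain_mask):
    """Zero out every voxel outside the (assumed precomputed) brain mask."""
    return [v if m else 0.0 for v, m in zip(voxels, brain_mask)]


def normalize(voxels, brain_mask):
    """Z-score intensities using the mean and spread of brain voxels only."""
    brain = [v for v, m in zip(voxels, brain_mask) if m]
    mu, sigma = mean(brain), pstdev(brain)
    if sigma == 0:
        raise ValueError("brain voxels have no intensity variation")
    return [(v - mu) / sigma if m else 0.0 for v, m in zip(voxels, brain_mask)]


def region_volumes(labels, brain_mask, voxel_volume_mm3=1.0):
    """Count voxels per segmentation label and convert the counts to mm^3."""
    counts = Counter(label for label, m in zip(labels, brain_mask) if m)
    return {label: n * voxel_volume_mm3 for label, n in counts.items()}


# Hypothetical toy data: 8 voxels, the first and last belong to the skull.
voxels = [900.0, 100.0, 110.0, 120.0, 130.0, 140.0, 150.0, 950.0]
brain_mask = [0, 1, 1, 1, 1, 1, 1, 0]
labels = ["skull", "hippocampus", "hippocampus",
          "prefrontal", "prefrontal", "prefrontal", "prefrontal", "skull"]

stripped = strip_skull(voxels, brain_mask)
normalized = normalize(stripped, brain_mask)
volumes = region_volumes(labels, brain_mask, voxel_volume_mm3=2.0)
print(volumes)  # {'hippocampus': 4.0, 'prefrontal': 8.0}
```

The resulting dictionary of region volumes is the kind of compact feature set that later steps could consume in place of the raw image.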

Building Neural Models from Brain Data

Once we have clean neuroimaging data, the next step is to build computational models that represent the individual’s brain. This is done using techniques from computational neuroscience, which allows us to simulate how neurons and brain networks function.

1. Modeling Individual Neural Networks

In this step, the brain data is used to create models that simulate how neurons in different brain regions are connected and communicate. These models aim to replicate the unique neural architecture of the individual’s brain. For example, if the neuroimaging data shows that a particular region of the brain is more developed or has more connections, this will be reflected in the model.

Key Steps in Neural Modeling:

- Neural Network Construction: Creating a virtual representation of the brain’s networks based on the connectivity between neurons and brain regions. For example, diffusion tensor imaging (DTI) data can be used to map the white matter tracts that connect regions.

- Parameterization: Estimating the model’s key parameters from the neuroimaging data, such as connection strengths between regions (a coarse stand-in for synaptic strength, which imaging cannot measure directly), conduction delays (how long signals take to travel between regions), and cortical thickness.

By building an individualized neural network model, we gain insights into how a person’s brain works and can begin to translate this into AI behaviors.
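As a rough sketch of construction and parameterization, the snippet below turns a list of white matter tracts into a small connectivity table. Everything here is an assumption for illustration: the `Tract` record, the use of streamline counts as a proxy for connection strength, the tract values, and the single placeholder conduction velocity of 5 m/s.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tract:
    """Hypothetical summary of one white matter tract from DTI tractography."""
    source: str
    target: str
    streamline_count: int  # assumed proxy for connection strength
    length_mm: float


def build_network(tracts, velocity_m_per_s=5.0):
    """Map each region pair to a relative weight and a conduction delay."""
    if not tracts:
        raise ValueError("at least one tract is required")
    if velocity_m_per_s <= 0:
        raise ValueError("velocity must be positive")
    max_count = max(t.streamline_count for t in tracts)
    network = {}
    for t in tracts:
        network[(t.source, t.target)] = {
            # Weight is relative to the strongest tract, so it lies in (0, 1].
            "weight": t.streamline_count / max_count,
            # mm divided by m/s gives milliseconds.
            "delay_ms": t.length_mm / velocity_m_per_s,
        }
    return network


# Hypothetical tract values, not measurements.
tracts = [
    Tract("broca", "wernicke", streamline_count=400, length_mm=100.0),
    Tract("prefrontal", "hippocampus", streamline_count=200, length_mm=150.0),
]
network = build_network(tracts)
print(network[("broca", "wernicke")])  # {'weight': 1.0, 'delay_ms': 20.0}
```

Keeping weights relative to the strongest tract makes networks from different people comparable on the same scale, at the cost of discarding absolute streamline counts.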

2. Linking Brain Data to Cognitive Functions

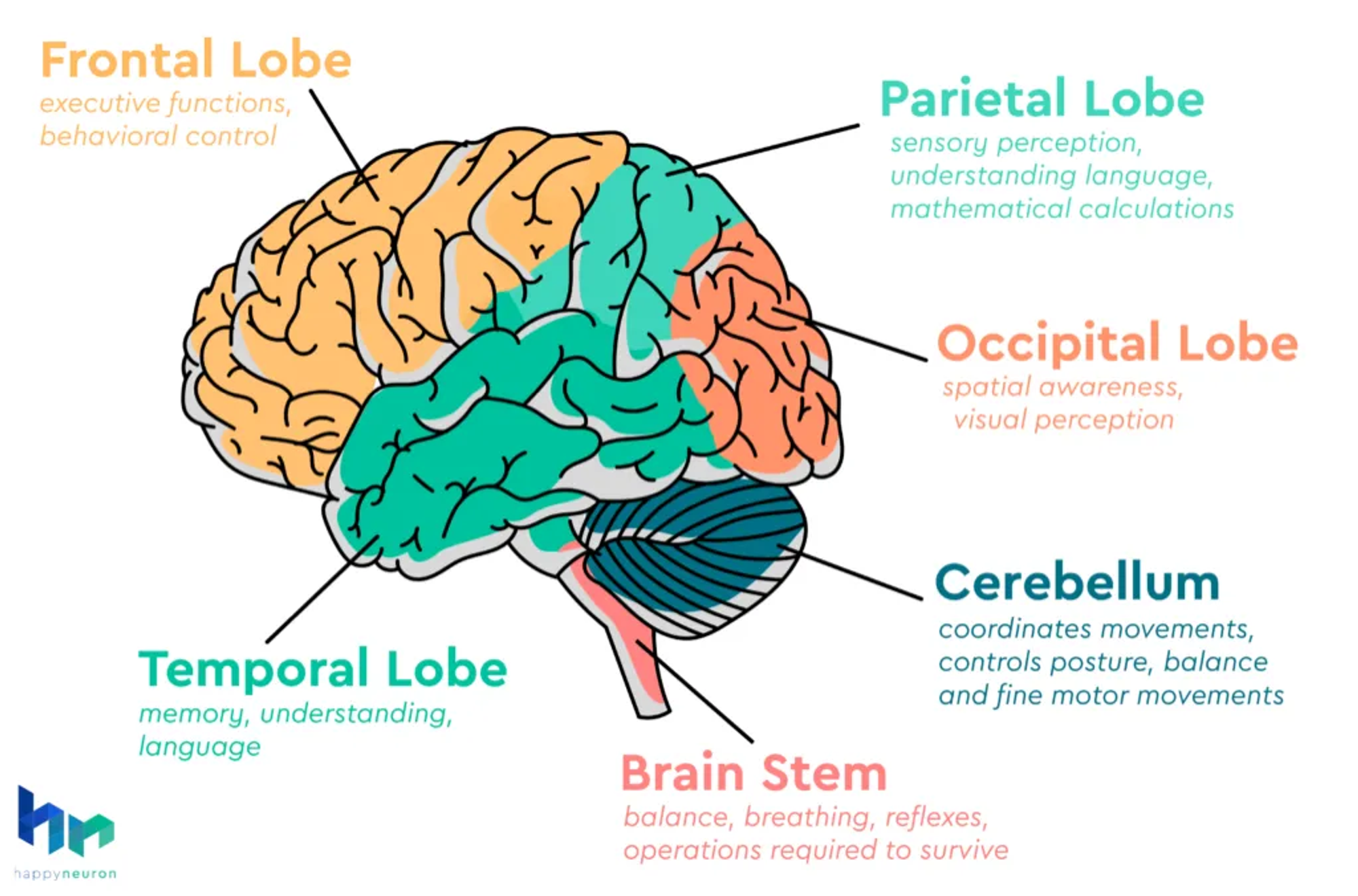

After constructing the neural model, the next step is to correlate specific brain structures with cognitive functions. We know that different regions of the brain are associated with different tasks. For instance, the occipital lobe processes visual information, while the prefrontal cortex is heavily involved in decision-making.

Using machine learning algorithms, we can establish relationships between the brain’s structure and the cognitive tasks that it performs. For example, if a person has a thicker prefrontal cortex, the AI model might predict that they are better at tasks involving complex reasoning and decision-making. This allows us to map the brain’s physical attributes to specific cognitive styles, which will shape how the AI interacts with the user.
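The simplest version of this idea is a one-variable linear regression from a structural measure to a task score. The sketch below fits such a line by ordinary least squares. The thickness and score values are synthetic and deliberately perfectly linear so the result is easy to check; they are not real findings, and real relationships between structure and cognition are far noisier.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


# Synthetic data for illustration only: score = 20 * thickness + 10.
thickness_mm = [2.0, 2.5, 3.0, 3.5]
reasoning_score = [50.0, 60.0, 70.0, 80.0]

slope, intercept = fit_line(thickness_mm, reasoning_score)
predicted = slope * 2.8 + intercept  # predicted score for a new, hypothetical user
```

In practice one would use many features and held-out data to check whether the fitted relationship generalizes at all before letting it influence the AI's behavior.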

Integrating Brain Data into AI Language Models

Now that we have a neural model that represents the individual’s brain, the next step is to integrate this data into an AI language model. This is the core of building personalized AI: translating brain structures and cognitive profiles into AI behaviors.

1. Mapping Neural Data to AI Parameters

An AI language model is governed by two kinds of parameters. Architectural choices, such as the size of the layers and the attention mechanisms (how much focus the model gives to different parts of a sentence), control how it processes language and generates responses. Training hyperparameters, such as the learning rate (how large a step the model takes on each update during training), control how quickly it adapts.

By analyzing the brain data, we can map certain brain features to these AI parameters. For example, if the brain data shows strong connectivity in the language areas (such as Broca’s and Wernicke’s areas), the AI model can be adjusted to enhance its focus on syntactic structure and grammar.
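One way to picture such a mapping is a small function from a cognitive profile to model settings. The sketch below is a simplification: instead of changing layer sizes or attention, it maps the profile to generation-time settings (a response length budget and style hints). The `CognitiveProfile` fields, the 0.7 threshold, and the token range are all hypothetical choices, not values from any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CognitiveProfile:
    """Hypothetical per-user scores in [0, 1] derived from earlier analysis."""
    language_connectivity: float
    visual_processing: float
    detail_preference: float


@dataclass(frozen=True)
class GenerationSettings:
    max_tokens: int
    style_hints: tuple


def map_profile(profile, threshold=0.7):
    """Translate profile scores into settings a language model could consume."""
    scores = (profile.language_connectivity,
              profile.visual_processing,
              profile.detail_preference)
    if not all(0.0 <= s <= 1.0 for s in scores):
        raise ValueError("profile scores must lie in [0, 1]")
    hints = []
    if profile.language_connectivity >= threshold:
        hints.append("emphasize syntactic structure and grammar")
    if profile.visual_processing >= threshold:
        hints.append("use visual descriptions and metaphors")
    # Assumed range: 150 tokens for brief summaries up to 500 for full detail.
    max_tokens = 150 + round(350 * profile.detail_preference)
    return GenerationSettings(max_tokens=max_tokens, style_hints=tuple(hints))


profile = CognitiveProfile(language_connectivity=0.9,
                           visual_processing=0.4,
                           detail_preference=0.5)
settings = map_profile(profile)
print(settings.max_tokens)  # 325
```

Keeping the mapping in one explicit, testable function makes it easy to audit which brain-derived score influenced which behavior.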

2. Fine-Tuning the AI Model with Transfer Learning

Once the AI model’s parameters have been personalized based on the brain data, the next step is to fine-tune the model using a technique called transfer learning. Transfer learning allows us to take a pre-trained AI model, like GPT-3, and adapt it using new data (in this case, the brain data).

During the fine-tuning process, the AI model learns to adjust its behavior based on the individual’s cognitive profile. For example, if the brain data indicates that the person has strong visual processing skills, the AI could adapt to provide more visual descriptions or metaphors in its responses.

This process results in an AI system that behaves in a more personalized way, tailoring its responses to the individual’s unique brain structure.
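The core mechanic of transfer learning, keeping the pre-trained part fixed and training only a small part on new data, can be shown with a toy numeric model. This is not a language model and involves no brain data: `base_features` is a stand-in for frozen pre-trained layers, the "head" is the small trainable part, and the user examples and starting weights are synthetic assumptions.

```python
def base_features(x):
    """Frozen 'pre-trained' feature extractor; never updated during fine-tuning."""
    return [x, x * x]


def predict(head, x):
    """Combine frozen features with the trainable head weights."""
    return sum(w * f for w, f in zip(head, base_features(x)))


def mse(head, examples):
    """Mean squared error of the head on (x, y) pairs."""
    return sum((predict(head, x) - y) ** 2 for x, y in examples) / len(examples)


def fine_tune(head, examples, lr=0.1, epochs=500):
    """Gradient descent on the head only; returns a new list of weights."""
    head = list(head)  # copy so the pre-trained weights stay untouched
    for _ in range(epochs):
        grads = [0.0] * len(head)
        for x, y in examples:
            err = predict(head, x) - y
            for i, f in enumerate(base_features(x)):
                grads[i] += 2 * err * f / len(examples)
        head = [w - lr * g for w, g in zip(head, grads)]
    return head


# Hypothetical starting point and synthetic user data: y = 2x + 0.5x^2.
pretrained_head = [1.0, 0.0]
user_examples = [(x, 2 * x + 0.5 * x * x) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]

tuned_head = fine_tune(pretrained_head, user_examples)
```

With a real language model the frozen part would be most of the network and the trainable part a final layer or small adapter, but the division of labor is the same.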

Challenges in Building Personalized AI Models

While the idea of using brain data to create personalized AI models is exciting, it also comes with several challenges. These challenges must be addressed to ensure that the technology is ethical, efficient, and effective.

1. Data Complexity

The human brain is one of the most complex systems we know of, with roughly 86 billion neurons and trillions of connections. Understanding and modeling this complexity is a massive challenge. Even with advanced neuroimaging techniques, we are only scratching the surface of how the brain works. Simplifying this complexity into parameters that an AI can use is no easy task.

Moreover, brain data is highly individualized. What works for one person may not work for another, which makes it difficult to generalize findings. This complexity means that developing a truly personalized AI model requires vast amounts of data and computational power.

2. Ethical and Privacy Concerns

Using brain data to personalize AI raises important ethical questions. Brain data is highly personal and sensitive, containing information about a person’s cognitive abilities, mental health, and even personality traits. Ensuring that this data is protected and used responsibly is critical.

There are also concerns about consent. Users must fully understand how their brain data is being used, who has access to it, and what it will be used for. Regulations like the Health Insurance Portability and Accountability Act (HIPAA) and the General Data Protection Regulation (GDPR) are designed to protect personal data, but implementing these safeguards in the context of AI and neuroimaging is complex.

3. Computational Power

Personalizing AI with brain data requires enormous computational resources. Neuroimaging data is large and complex, and processing it in real time for integration with AI systems demands significant computational power. While advancements in cloud computing and hardware are helping, this remains a bottleneck in developing scalable personalized AI systems.
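A back-of-the-envelope calculation gives a feel for the data volume. The sketch below estimates the uncompressed size of one fMRI run. The grid of 64 x 64 x 36 voxels, 300 volumes, and 4 bytes per voxel are illustrative assumptions, not figures from any particular scanner or study.

```python
def scan_size_bytes(shape, n_volumes, bytes_per_voxel=4):
    """Uncompressed size of a 4D scan: voxels per volume x volumes x bytes."""
    x, y, z = shape
    return x * y * z * n_volumes * bytes_per_voxel


# Assumed acquisition parameters, for illustration only.
size = scan_size_bytes((64, 64, 36), n_volumes=300)
size_mib = size / 2**20
print(size_mib)  # 168.75
```

Multiplying a figure like this by the number of runs per person and the number of users shows how quickly storage and processing needs grow.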

Real-World Applications of Personalized AI

Despite these challenges, the potential applications of personalized AI models are vast and exciting. Here are a few key areas where this technology could have a profound impact:

1. Healthcare

Personalized AI systems could revolutionize healthcare by offering tailored medical advice and treatment plans. For instance, an AI system that understands a patient’s unique cognitive profile could provide more relevant health information, improving the patient’s ability to follow through with treatments. It could also adapt to better communicate with patients suffering from cognitive impairments, such as dementia or traumatic brain injuries.

Additionally, in mental health care, personalized AI could provide more accurate diagnoses and treatment plans by analyzing brain data. For example, it could detect patterns in brain activity that signal depression or anxiety and suggest personalized interventions.

2. Education

In education, personalized AI could be used to create adaptive learning platforms that adjust to a student’s cognitive style. For example, if a student has a strong visual processing ability, the AI could present more visual aids, like charts and diagrams, to enhance their learning experience.

Personalized AI tutors could also adapt to a student’s pace, providing more challenging material for advanced learners or simplifying content for those who need extra support. By understanding how a student’s brain works, these AI systems could optimize learning outcomes and make education more inclusive.

3. Human-Computer Interaction

Personalized AI systems could improve the way people interact with technology. By understanding the user’s cognitive style, the AI could adjust its behavior to better match the user’s preferences. This could lead to more intuitive interfaces, making technology more accessible to people with varying cognitive abilities.

For example, people with cognitive impairments might benefit from an AI system that provides slower, more detailed explanations, while users who process information quickly could receive more concise responses. Personalized AI could also be used in customer service, tailoring interactions based on the customer’s preferences and past behavior.

Conclusion

The integration of neuroimaging and AI offers exciting opportunities for creating personalized AI systems that can adapt to individual users’ cognitive styles. By using data from MRI, fMRI, and DTI scans, we can build detailed models of the brain that help shape AI behavior. While there are challenges in terms of data complexity, ethics, and computational power, the potential applications in healthcare, education, and human-computer interaction are immense.

Personalized AI systems could revolutionize the way we interact with technology, offering more natural, responsive, and effective solutions. As technology advances, we may soon live in a world where AI systems can truly understand and adapt to our unique ways of thinking.